The System Inside the System

Announcing two new Ruby gems: vsm and airb

So there I was, reading The Unaccountability Machine like apparently everyone else on my timeline, when something clicked. That uncomfortable click of recognizing a pattern you've been unconsciously building toward for months.

Dan Davies was explaining cybernetics and Stafford Beer's theories about organizational dysfunction, and I'm sitting there with this growing sense of... recognition? Like when you're struggling to remember a song and someone finally names it for you.

The Loop That Was Already There

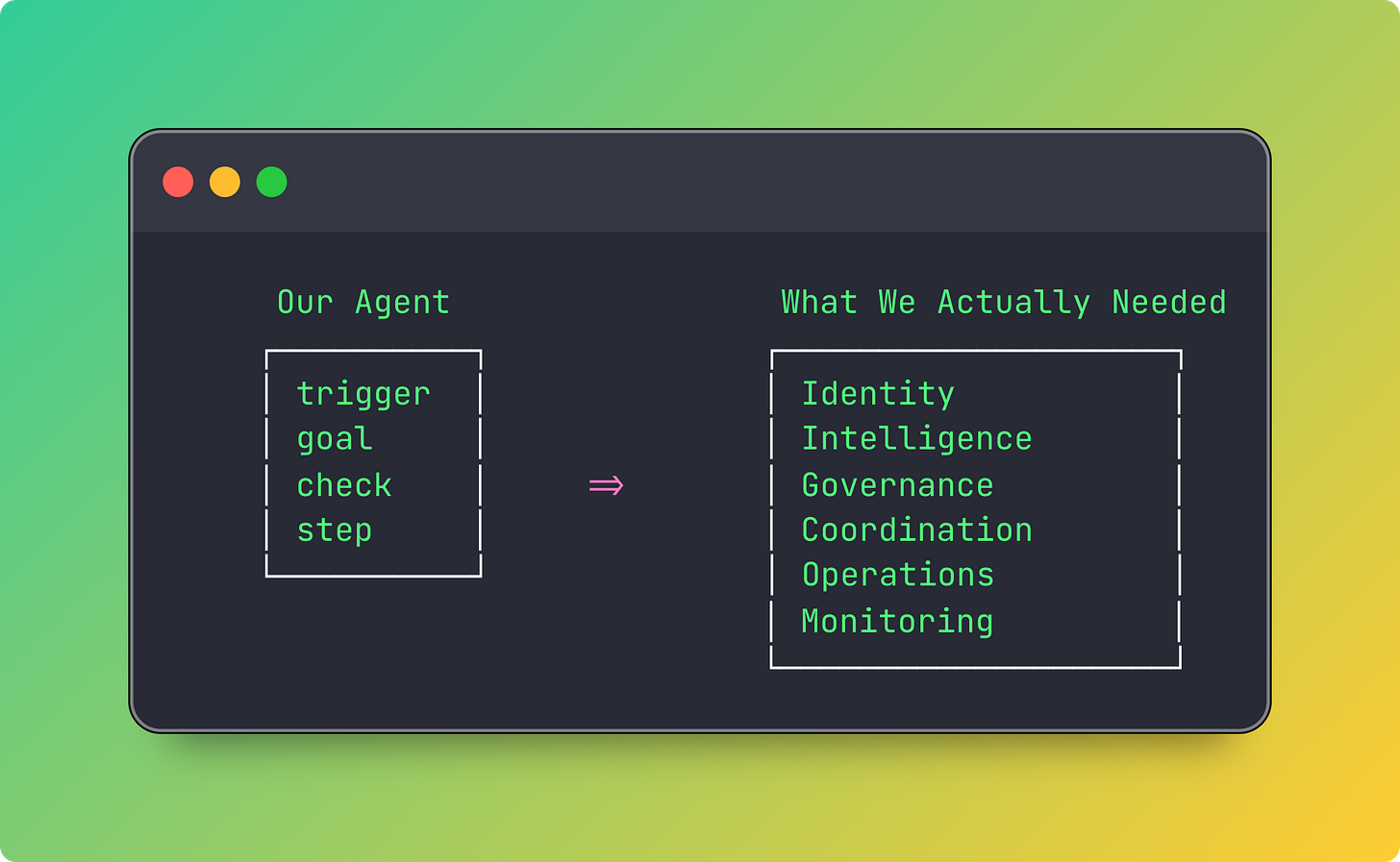

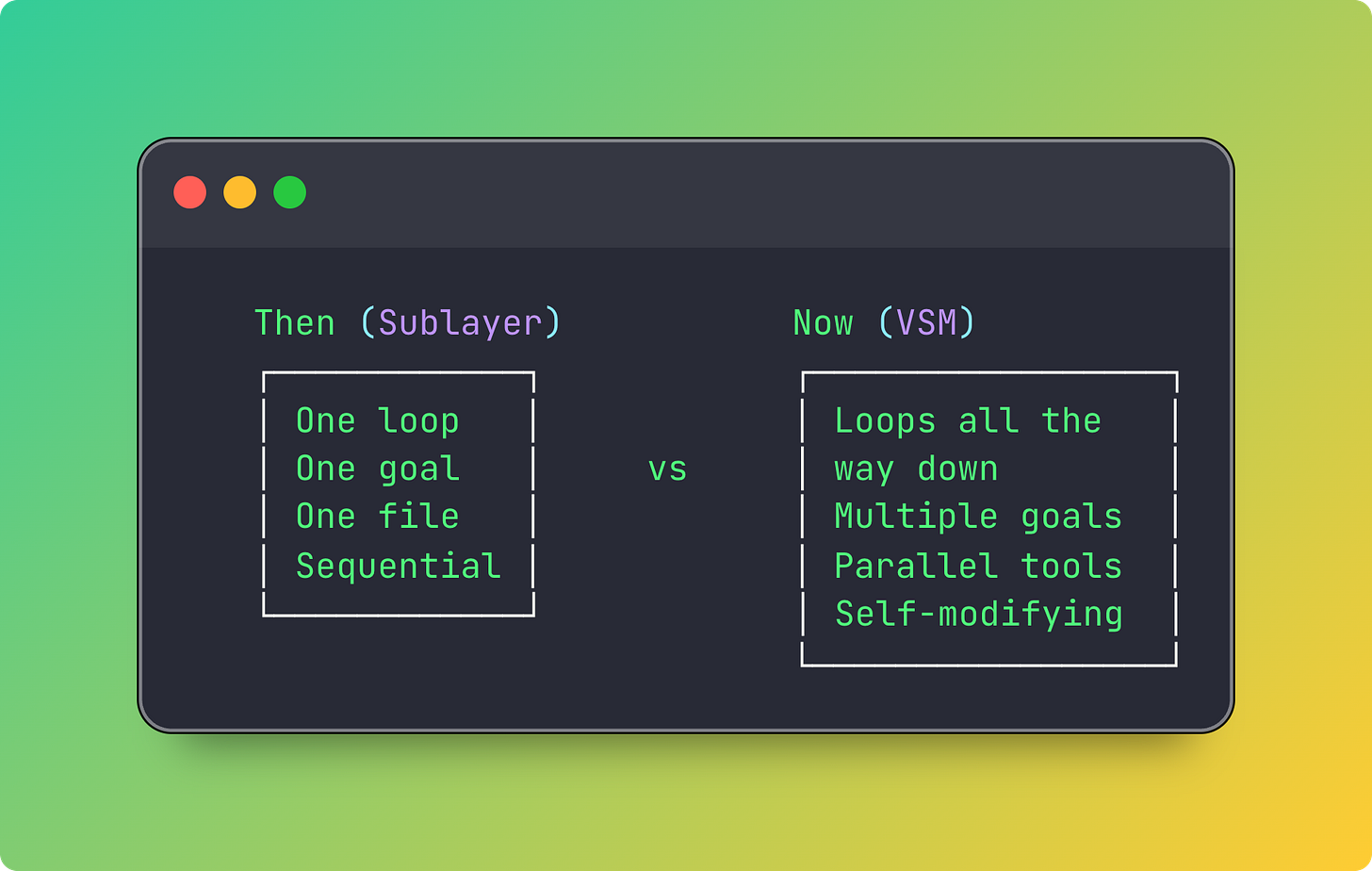

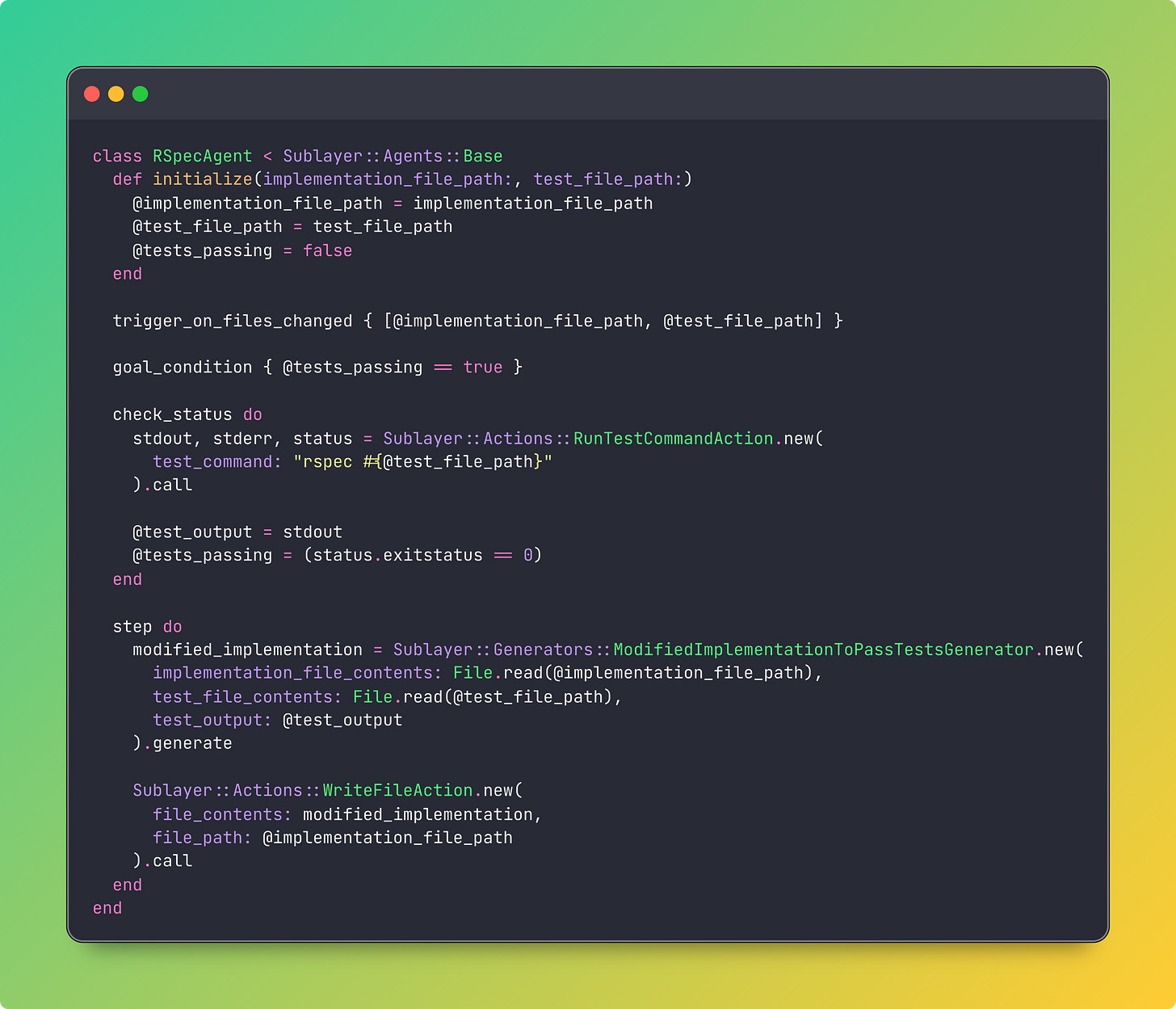

See, we'd been exploring this idea for an agent abstraction at Sublayer. Simple feedback loops with an LLM in the middle:

Look at that. We had triggers, goals, status checks, and steps. A primitive feedback loop, sure, but it was... something. The agent would watch, check, act, repeat. Like a very determined but slightly dim robot trying to solve a Rubik's cube.
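Stripped to its bones, that kind of loop looks something like this. It's a minimal illustration in plain Ruby, not the original Sublayer code: `FeedbackLoopAgent`, `goal_condition`, `check_status`, `step`, and `max_iterations` are hypothetical names, and a lambda that pretends to fix one failing spec stands in for the LLM call.

```ruby
# Hypothetical sketch of a trigger -> check -> step loop; not Sublayer's code.
class FeedbackLoopAgent
  attr_reader :history

  def initialize(goal_condition:, check_status:, step:, max_iterations: 5)
    @goal_condition = goal_condition # status -> true once we're done
    @check_status = check_status     # -> current status, e.g. a test summary
    @step = step                     # status -> performs one action (the LLM's job)
    @max_iterations = max_iterations # hard stop so the loop can't spin forever
    @history = []
  end

  # Called by a trigger such as a file save. Watch, check, act, repeat.
  def on_trigger
    @max_iterations.times do
      status = @check_status.call
      @history << status
      return :done if @goal_condition.call(status)

      @step.call(status)
    end
    :gave_up
  end
end

failures = 2 # a fake test suite that starts with two failing specs

agent = FeedbackLoopAgent.new(
  goal_condition: ->(status) { status == "0 failures" },
  check_status:   -> { "#{failures} failures" },
  step:           ->(_status) { failures -= 1 } # pretend the LLM fixed one spec
)

result = agent.on_trigger
puts result
p agent.history
```

Everything lives in three lambdas and an iteration cap. There's nowhere for context, policy, or coordination to go.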

But reading about Beer's Viable System Model, I realized we'd built the equivalent of a nervous system without organs. We had reflexes but no brain, no governance, no ability to understand context beyond "tests pass" or "tests fail."

A Brief Detour Through Corporate Dysfunction

The Unaccountability Machine is primarily about why large organizations make terrible decisions. But it’s also about information flow, feedback loops, and how systems regulate themselves (or don't).

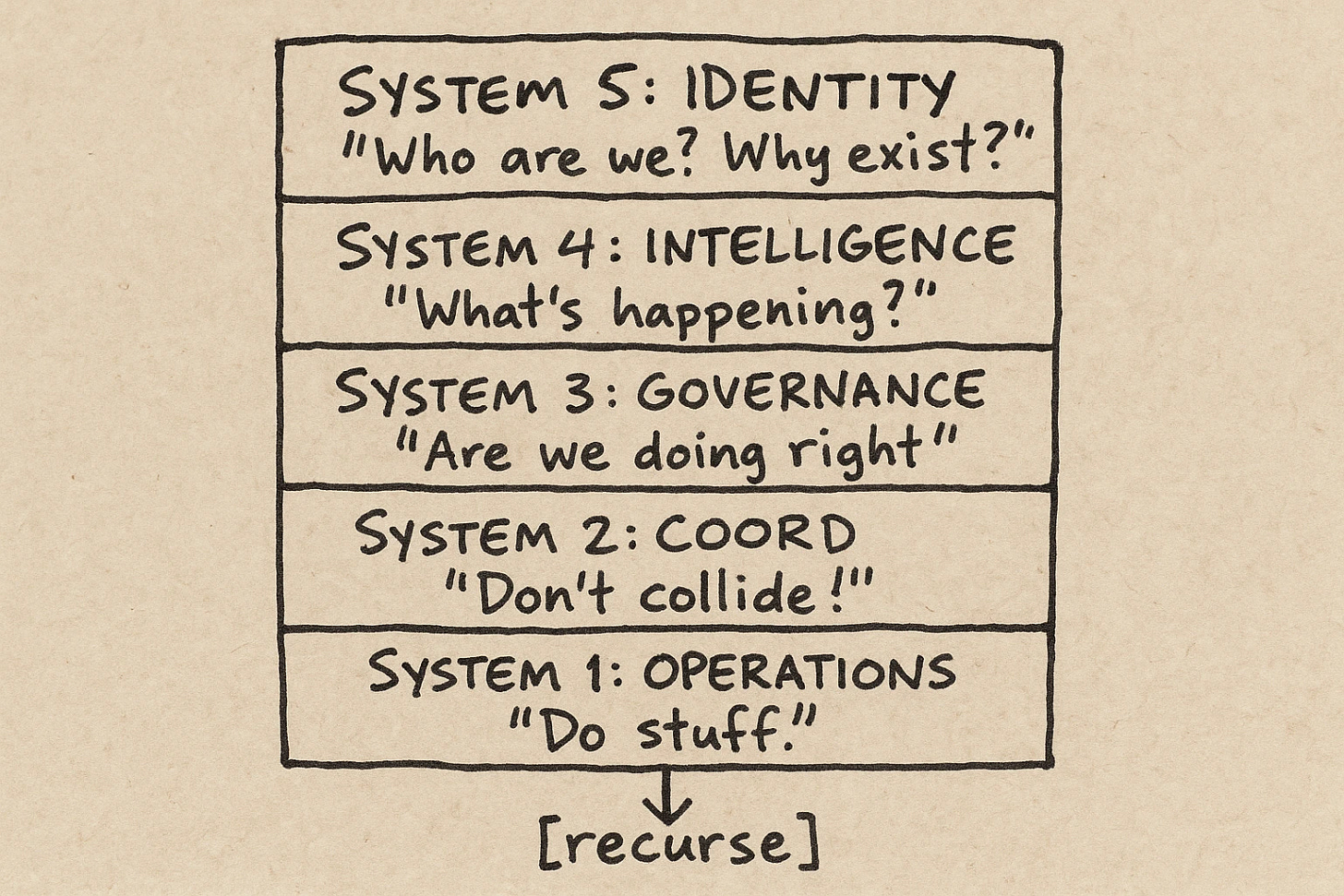

Beer figured out that viable systems (systems that survive and thrive) have this recursive structure. Five subsystems, each with a specific role, and here's the kicker: each operational unit can itself contain the entire structure again. It's organizational theory meets fractal geometry meets... well, meets software architecture.

I drew this on a napkin at 2 AM. Then I started drawing it again in code. Then I realized I was building something interesting.

I’m actually building a few interesting things. You may have read a couple of posts where I talk about APM, our gaming-inspired command center for working with swarms of agents. We’re setting up a more formal beta soon; if you’re interested in joining or following along, sign up at actionsperminute.io.

Ruby: The Language That Lets You Change the Rules While Playing

You know what's wild about Ruby? We've had this incredibly dynamic runtime for decades, and mostly we've used it to make web apps with nice syntax. It's like having a sports car and only driving it to get groceries.

Ruby lets you do things that would make static language developers wake up in a cold sweat.
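For instance, here's a small sketch of a class that gains methods while the program is running: once from a block, and once from a string of source code, which is the form an LLM would hand back. `Toolbox`, `learn`, and `word_count` are illustrative names; `define_method` and `class_eval` are standard Ruby.

```ruby
# Illustrative only: a class that gains capabilities while the program runs.
class Toolbox
  # List the capabilities this class currently has.
  def tools
    self.class.instance_methods(false).sort
  end

  # Teach the class a new capability from a block.
  def self.learn(name, &body)
    define_method(name, &body)
  end
end

toolbox = Toolbox.new # created before the new methods exist

Toolbox.learn(:shout) { |text| text.upcase + "!" }

# Source code as a string, the form an LLM would hand back. Evaluating
# untrusted code like this is exactly why governance matters.
generated_source = <<~RUBY
  def word_count(text)
    text.split.size
  end
RUBY
Toolbox.class_eval(generated_source)

puts toolbox.shout("hello")
puts toolbox.word_count("the system inside the system")
p toolbox.tools
```

The existing `toolbox` instance picks up both methods immediately, because Ruby looks methods up on the class at call time.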

Metaprogramming is sometimes dismissed as cleverness for its own sake, but this is exactly the kind of runtime flexibility that makes self-modifying systems possible: the kind that would let an AI agent literally write its own capabilities while it's running.

Remember When Rails Changed Everything?

<tangent> I remember the before times. Enterprise JavaBeans. XML configuration files that configured other XML files. Building a simple CRUD app was a three-week expedition into the heart of darkness.

Then DHH shows up with this 15-minute blog demo, and suddenly everyone could build Basecamp clones in their spare time. Rails took MVC and made the right things easy and the wrong things hard. </tangent>

I keep thinking about that moment. What's the Rails moment for AI agents?

We're all out here stringing together API calls, managing conversation state, handling tool calls, building our own janky routers for messages. It feels exactly like web development did in 2003. So much plumbing, so little actual problem-solving.

What if there was a framework that just... understood how agents should be organized? That made the right patterns obvious?

The Missing Pieces

Looking back at that RSpec agent, I could see what was missing.

We had built a reflex. What we needed was a nervous system.

The Cybernetics Revelation

Here's what clicked when I was reading Davies: the VSM is a blueprint for systems that can regulate themselves, adapt, and (this is the important part) contain other instances of themselves.

Think about what an AI agent actually needs:

Identity: Know what you're supposed to be doing (our goal_condition, but richer)

Intelligence: Understand what's happening in your environment (beyond just test output)

Governance: Have policies and limits (please don't delete ~/)

Coordination: Manage parallel operations without chaos (when you have multiple agents)

Operations: Actually do stuff like read files, write code, call APIs (our step, but composable)
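To make the five roles concrete, here's a minimal sketch with one small object per role and a `ViableSystem` that wires them together. Every class name is hypothetical and none of this is the vsm gem's API; `Intelligence` is a hard-coded stand-in for an LLM, and the governance rule is deliberately naive.

```ruby
# One small object per VSM role. All names are hypothetical; this is not the vsm gem's API.
Identity = Struct.new(:purpose) # what the system is for

class Intelligence
  # Stand-in for an LLM call: turn an observation into a proposed action.
  def plan(observation)
    if observation.include?("failed")
      { tool: :read_file, path: "spec/widget_spec.rb" }
    elsif observation.include?("cleanup")
      { tool: :delete, path: "~/" }
    else
      { tool: :noop }
    end
  end
end

class Governance
  def initialize(protected_paths)
    @protected_paths = protected_paths
  end

  # Deliberately naive policy: refuse any action aimed at a protected path.
  def allow?(action)
    !@protected_paths.include?(action[:path])
  end
end

class Coordination
  def initialize
    @lock = Mutex.new
  end

  # Run one operation at a time so parallel callers can't interleave.
  def schedule(&work)
    @lock.synchronize(&work)
  end
end

class Operations
  def initialize(tools)
    @tools = tools # tool name => callable
  end

  def perform(action)
    @tools.fetch(action[:tool]).call(action)
  end
end

class ViableSystem
  attr_reader :identity

  def initialize(identity:, intelligence:, governance:, coordination:, operations:)
    @identity = identity
    @intelligence = intelligence
    @governance = governance
    @coordination = coordination
    @operations = operations
  end

  # Intelligence proposes, governance vets, coordination schedules, operations act.
  def handle(observation)
    action = @intelligence.plan(observation)
    return "denied: #{action[:tool]} #{action[:path]}" unless @governance.allow?(action)

    @coordination.schedule { @operations.perform(action) }
  end
end

agent = ViableSystem.new(
  identity:     Identity.new("keep the specs green"),
  intelligence: Intelligence.new,
  governance:   Governance.new(["~/", "/"]),
  coordination: Coordination.new,
  operations:   Operations.new({
    read_file: ->(a) { "reading #{a[:path]}" },
    delete:    ->(a) { "deleting #{a[:path]}" },
    noop:      ->(_a) { "nothing to do" }
  })
)

puts agent.handle("1 example, 1 failed")
puts agent.handle("time for cleanup")
puts agent.handle("all green")
```

Governance sits between deciding and doing: the intelligence is free to propose deleting `~/`, it just never reaches operations.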

Each tool an agent uses could itself be an agent with the same structure. Your file editor can be a full system with its own intelligence about how to safely modify code. Your test runner can reason about what tests to run and why.

Recursion all the way down. Or up. Depending on your perspective.
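The recursive part can be sketched with a single class whose children answer the same `handle` method it does, so a tool and a whole agent are interchangeable. `NestedSystem`, the `route` key, and the per-level `policy` lambda are hypothetical choices for this illustration, not how vsm actually routes messages.

```ruby
# Hypothetical sketch: a system whose children are systems with the same interface.
class NestedSystem
  attr_reader :name

  def initialize(name, policy: ->(_message) { true }, &work)
    @name = name
    @policy = policy # this level's own governance
    @work = work     # what to do when there's no child to delegate to
    @children = {}
  end

  def add(child)
    @children[child.name] = child
    self
  end

  # Apply local policy, then either delegate down the route or do the work here.
  def handle(message)
    return "#{name}: refused" unless @policy.call(message)

    route = message.fetch(:route, [])
    child = @children[route.first]
    if child
      "#{name} > " + child.handle(message.merge(route: route.drop(1)))
    elsif @work
      "#{name}: #{@work.call(message)}"
    else
      "#{name}: no handler"
    end
  end
end

editor = NestedSystem.new("file_editor", policy: ->(m) { !m[:text].start_with?("~/") }) { |m| "edited #{m[:text]}" }
tests  = NestedSystem.new("test_runner") { |m| "ran #{m[:text]}" }
agent  = NestedSystem.new("agent").add(NestedSystem.new("tools").add(editor).add(tests))

puts agent.handle({ route: %w[tools file_editor], text: "lib/widget.rb" })
puts agent.handle({ route: %w[tools file_editor], text: "~/.bashrc" })
puts agent.handle({ route: %w[tools test_runner], text: "spec/widget_spec.rb" })
```

Each level applies its own policy before delegating, so the file editor can refuse an edit even when every level above it said yes.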

The Thing Nobody Asked For But I Built Anyway

So I'm sitting there, wired on coffee and cybernetics theory, thinking: what if we actually built this?

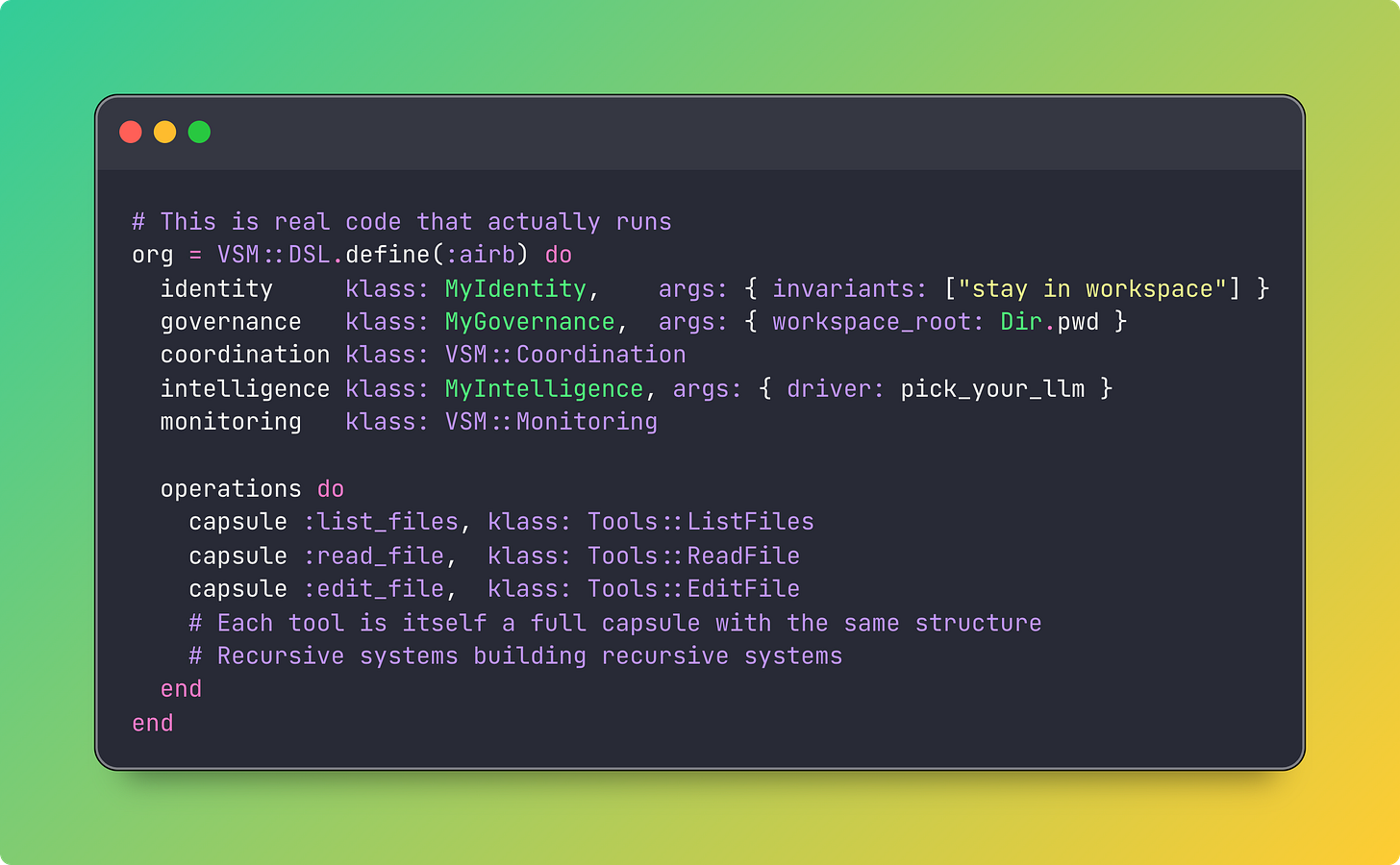

Not another agent framework that's just prompt templates and API wrappers. But something that embraces the recursive nature of intelligence itself. Something that leans into Ruby's unique capabilities.

Look at that. It's almost... declarative. You're not writing the plumbing; you're describing the structure and letting the framework handle the chaos.
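Ruby's `instance_eval` is what makes that declarative style cheap to build. Here's a toy builder in the same spirit; `SystemSpec` and its `identity`, `governance`, `operations`, and `tool` keywords are hypothetical and are not vsm's actual DSL.

```ruby
# Toy declarative builder (hypothetical; not the vsm gem's DSL).
class SystemSpec
  attr_reader :name, :purpose, :rules, :tools

  # Evaluate the block with a fresh spec as `self`, so bare words become method calls.
  def self.define(name, &block)
    spec = new(name)
    spec.instance_eval(&block)
    spec
  end

  def initialize(name)
    @name = name
    @rules = []
    @tools = []
  end

  def identity(purpose)
    @purpose = purpose
  end

  def governance(*rules)
    @rules.concat(rules)
  end

  def operations(&block)
    instance_eval(&block)
  end

  def tool(tool_name)
    @tools << tool_name
  end
end

spec = SystemSpec.define(:coding_agent) do
  identity   "help edit this repository"
  governance :stay_in_workspace, :confirm_writes
  operations do
    tool :read_file
    tool :edit_file
  end
end

p spec.purpose
p spec.rules
p spec.tools
```

The block never mentions the builder object. `instance_eval` runs it with the spec as `self`, so `identity` and `tool` are just method calls on the spec.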

So… I Built Two Things

vsm: A Ruby gem that implements a version of Beer's Viable System Model as a framework for building self-contained, recursive systems that can act as AI agents. Message-driven, async-first, with built-in observability. It knows how to talk to OpenAI, Anthropic, and Gemini so far, but more importantly, it knows how to organize capabilities recursively.

airb: A CLI coding agent built on top of VSM. Right now it reads files and makes edits, the bare minimum of usefulness. But because it's built on VSM, it's actually a foundation for something more ambitious. Something that could spawn specialized sub-agents for different domains. Something that could modify its own capabilities based on what it learns.
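Reading and editing files is also where governance earns its keep. Here's a minimal sketch, assuming a design in which every path is resolved against a workspace root and rejected if it escapes, and an edit must match exactly one snippet. `WorkspaceTools`, `read_file`, and `edit_file` are hypothetical names, not airb's implementation.

```ruby
require "tmpdir"

# Hypothetical file tools for a coding agent, confined to one workspace root.
class WorkspaceTools
  class OutsideWorkspace < StandardError; end

  def initialize(root)
    @root = File.expand_path(root)
  end

  def read_file(path)
    File.read(resolve(path))
  end

  # Replace one exact snippet; refuse if it appears zero or several times.
  def edit_file(path, find:, replace:)
    full = resolve(path)
    text = File.read(full)
    count = text.scan(find).size
    raise ArgumentError, "expected 1 match for #{find.inspect}, found #{count}" unless count == 1

    File.write(full, text.sub(find) { replace }) # block form: no backslash escapes in replace
  end

  private

  # Resolve relative to the root and reject anything that escapes it
  # (../ tricks, absolute paths, ~/ expansions).
  def resolve(path)
    full = File.expand_path(path, @root)
    inside = full == @root || full.start_with?(@root + File::SEPARATOR)
    raise OutsideWorkspace, path unless inside

    full
  end
end

edited = nil
blocked = nil

Dir.mktmpdir do |dir|
  File.write(File.join(dir, "hello.rb"), "puts 'hello'\n")
  tools = WorkspaceTools.new(dir)

  tools.edit_file("hello.rb", find: "hello", replace: "world")
  edited = tools.read_file("hello.rb")

  begin
    tools.read_file("../outside.txt")
  rescue WorkspaceTools::OutsideWorkspace => e
    blocked = e.message
  end
end

puts edited
puts "blocked: #{blocked}"
```

Requiring exactly one match is a cheap way to keep an LLM-proposed edit from silently landing in the wrong place.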

The interesting part isn't what these do today. It's what they make possible tomorrow.

The Question

Is this it? Is VSM the abstraction that makes building AI agents as straightforward as building web apps?

Probably not. But maybe it's a step in that direction. Maybe it's about exploring what happens when we think about agents as systems that contain systems. Maybe Ruby's dynamic nature is exactly what we need for building systems that can evolve.

Looking at our old RSpec agent now, it seems almost quaint. Like looking at a Model T after you've seen a Tesla. Sure, they both move forward, but one has a lot more going on under the hood.

Or maybe I just spent too much time reading cybernetics theory and built an over-engineered framework for something that could have been a simple script.

But you know what? At least now when my agent fails, it fails with structure. It fails with governance and coordination and properly correlated message IDs. It fails with style.
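The message-driven, async-first shape behind those correlated IDs can be sketched with the standard library alone: a `Queue`, one worker thread, and messages that carry the id of the message they answer. `Message`, `Bus`, and the `:tool_call`/`:tool_result` kinds are hypothetical names for this sketch, not vsm's types, and a real runtime would need error handling and more than one worker.

```ruby
require "securerandom"

# Every message carries its own id and, if it's a reply, the id of the
# message it answers. Hypothetical shape, not the vsm gem's Message class.
Message = Struct.new(:kind, :payload, :id, :correlation_id, keyword_init: true) do
  def self.build(kind, payload, reply_to: nil)
    new(kind: kind, payload: payload, id: SecureRandom.uuid, correlation_id: reply_to&.id)
  end
end

# A tiny async bus: publish returns immediately; one background thread
# dispatches to handlers and records every message it sees.
class Bus
  def initialize
    @inbox = Queue.new
    @handlers = {}
    @log = [] # observability: everything that flowed through
    @log_lock = Mutex.new
    @worker = Thread.new { dispatch_loop }
  end

  def on(kind, &handler)
    @handlers[kind] = handler
  end

  def publish(message)
    @inbox << message
  end

  def log
    @log_lock.synchronize { @log.dup }
  end

  def stop
    @inbox << :stop
    @worker.join
  end

  private

  def dispatch_loop
    loop do
      message = @inbox.pop
      break if message == :stop

      @log_lock.synchronize { @log << message }
      @handlers[message.kind]&.call(message)
    end
  end
end

bus = Bus.new
replies = Queue.new

# A "tool" that answers tool calls, correlating its reply to the request.
bus.on(:tool_call) { |msg| bus.publish(Message.build(:tool_result, "ok: #{msg.payload}", reply_to: msg)) }
bus.on(:tool_result) { |msg| replies << msg }

call = Message.build(:tool_call, "read README.md")
bus.publish(call)
reply = replies.pop # blocks until the worker thread answers
bus.stop

bus.log.each { |m| puts "#{m.kind} id=#{m.id[0, 8]} re=#{m.correlation_id&.slice(0, 8) || '-'}" }
```

Because each reply records its request's id, the log can be stitched back into a conversation after the fact, which is what makes a failure legible.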

The Future Is Unevenly Distributed (But the Gems Are on RubyGems)

We're at this moment where LLMs are powerful enough to use the kind of runtime flexibility that Ruby has always offered but many have been too scared to fully embrace. Self-modifying code is now a feature. Metaprogramming isn't showing off; it's necessary.

Maybe the next generation of AI agents will need to be built in Ruby.

Maybe the future of AI is less about making the models bigger and more about making the systems that use them more capable of organizing themselves.

Or maybe I've just been reading too much cybernetics theory.

Either way, the code's out there now. Let's see what happens when we give AI agents the ability to build themselves.

If you're wondering whether this could actually build something as powerful as Claude Code or Cursor or AMP or Zed or whatever the hot thing is by the time you read this... well, that's the thing about Ruby. It makes it easy for you to try something and find out.