The Hackathon Where We Mostly Just Talked

Architectural Control at Agent Velocity

A few weeks ago Justin and I went to a hackathon at Google. Not a “use our cloud API” hackathon. A “we literally shipped a small language model with Chrome and you can use it locally” hackathon.

Let me say that again: Chrome. Has. An. LLM. Built. In.

The same browser that you’re probably reading this post in is now shipping with a language model. They call it “Built-in AI” and they’re giving developers APIs to do inference locally. No server calls. No API keys. No rate limits. Just you, the browser, and a surprisingly capable small model that can understand images and generate text right there on your machine.

The Hackathon (or: Two People Having a Very Long Conversation)

Justin and I had a problem we wanted to explore: what if you could navigate the latent space of image generation without writing prompts?

Image generators (Midjourney, DALL-E, Nano Banana, Stable Diffusion, etc.) all work the same way. You write increasingly elaborate prompts. “A cat” becomes “a tabby cat sitting” becomes “a tabby cat sitting on a velvet cushion in the style of a renaissance painting, dramatic lighting, 8k, trending on artstation.”

It’s prompt engineering as an art form. Which is fine! Except it’s also kind of exhausting. And it makes exploring variations feel like playing hot-and-cold with a very literal robot.

What if instead of describing what you want, you just… adjusted sliders? Abstract attributes with values from 1-10. Not “add more blue” but something like “make it 7/10 mysterious instead of 4/10 mysterious.”

That was the question we wanted to answer.

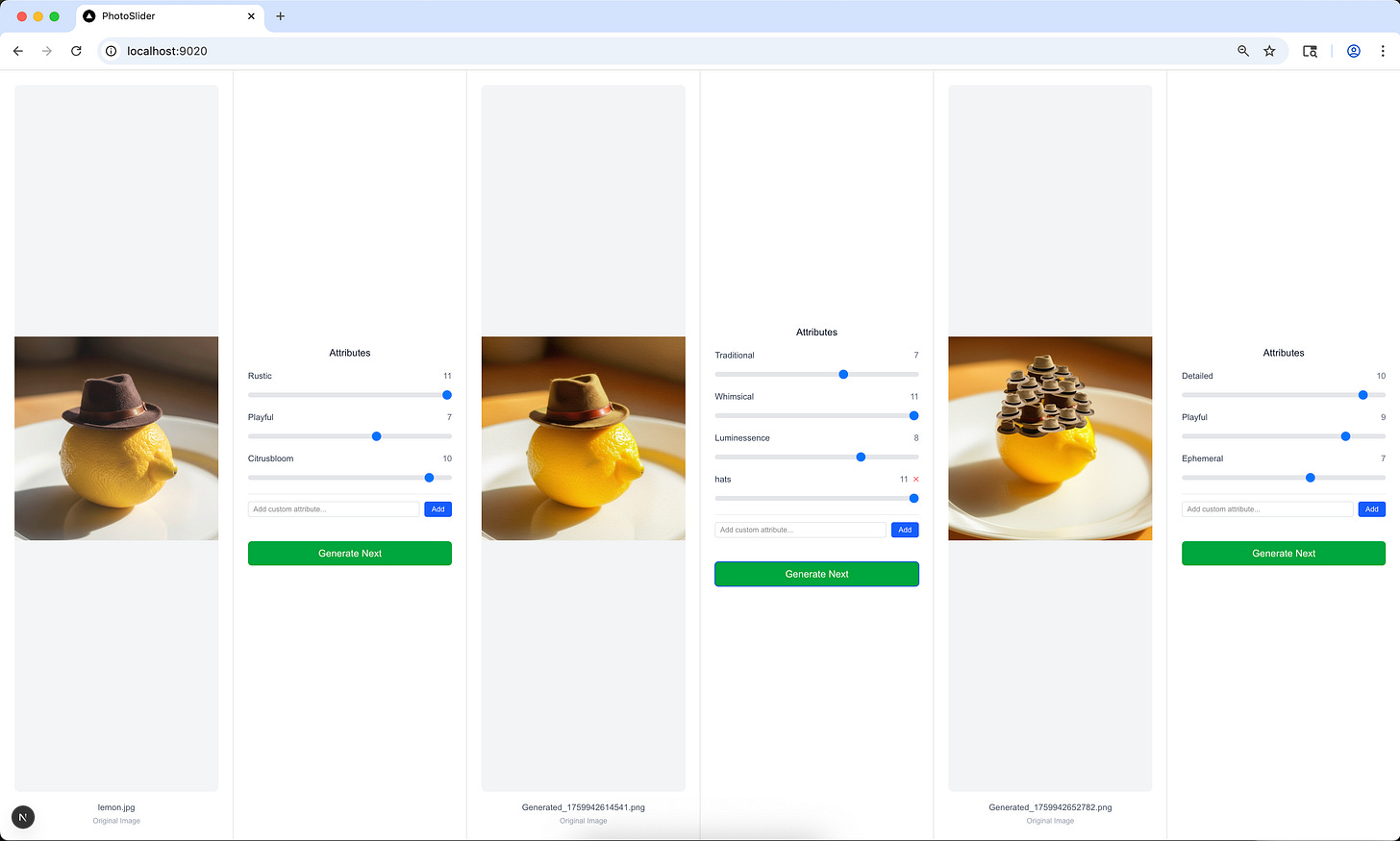

So we built Photoslider.

The Thing We Built

Here’s how it works:

You upload an image

Chrome’s Built-in AI analyzes it and returns three attributes with scores from 1-10. Basically, we just let the AI decide what’s interesting about your image. One time it gave us “Geometric”, “Fragmented”, and “Luminous”. Another time it was “Mystery”, “Brightness”, and “Motion.”

You get sliders for each attribute

You adjust them however you want (more geometric? less fragmented? crank that luminous to 11!)

You can also create your own custom attributes if the AI’s don’t capture what you want to change

Click “Generate Next”

The differences between the original and new values become a prompt (“increase geometric by 3 levels, decrease fragmented by 2 levels, add 4 levels of luminous”)

That prompt plus your original image goes to Gemini’s image generator.

New image comes back

You can do it all over again, or branch off from any previous image in your history
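For the curious, here is roughly what that diff-to-prompt step could look like. This is a minimal Python sketch and not the actual Photoslider code: the `Attribute` class, the `describe_changes` function, and the exact wording of the phrases are all made up for illustration, and treating a custom attribute as “add N levels” is an assumption. The example values are the sunset scores that come up later in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attribute:
    """One slider: a name plus a 1-10 score (hypothetical shape)."""
    name: str
    score: int


def describe_changes(original: list[Attribute], adjusted: dict[str, int]) -> str:
    """Turn slider movements into a natural-language edit instruction."""
    known = {attr.name for attr in original}
    phrases = []

    for attr in original:
        delta = adjusted.get(attr.name, attr.score) - attr.score
        if delta == 0:
            continue  # untouched sliders add nothing to the prompt
        verb = "increase" if delta > 0 else "decrease"
        phrases.append(f"{verb} {attr.name.lower()} by {_levels(abs(delta))}")

    # Assumption: a custom attribute has no original score, so its full
    # value is phrased as something to add.
    for name, score in adjusted.items():
        if name not in known:
            phrases.append(f"add {_levels(score)} of {name.lower()}")

    return ", ".join(phrases)


def _levels(n: int) -> str:
    """Format a count with the right singular or plural noun."""
    return f"{n} level" if n == 1 else f"{n} levels"


sunset = [Attribute("warmth", 8), Attribute("tranquility", 6), Attribute("drama", 4)]
print(describe_changes(sunset, {"warmth": 4, "drama": 9}))
# decrease warmth by 4 levels, increase drama by 5 levels
```

Sliders that did not move are left out on purpose, so the prompt only mentions what should change. The resulting string would then go to the image generator together with the original image; that call is omitted here because it depends on the API being used.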

It’s kind of like being a DJ for images. Or maybe more like those sci-fi movies where people adjust holograms with hand gestures, except with mouse clicks and vibes.

The code is up on GitHub at sublayerapp/photoslider.

But there’s a big reason why I’m telling you all this: we didn’t really code it.

The Actual Hackathon Experience

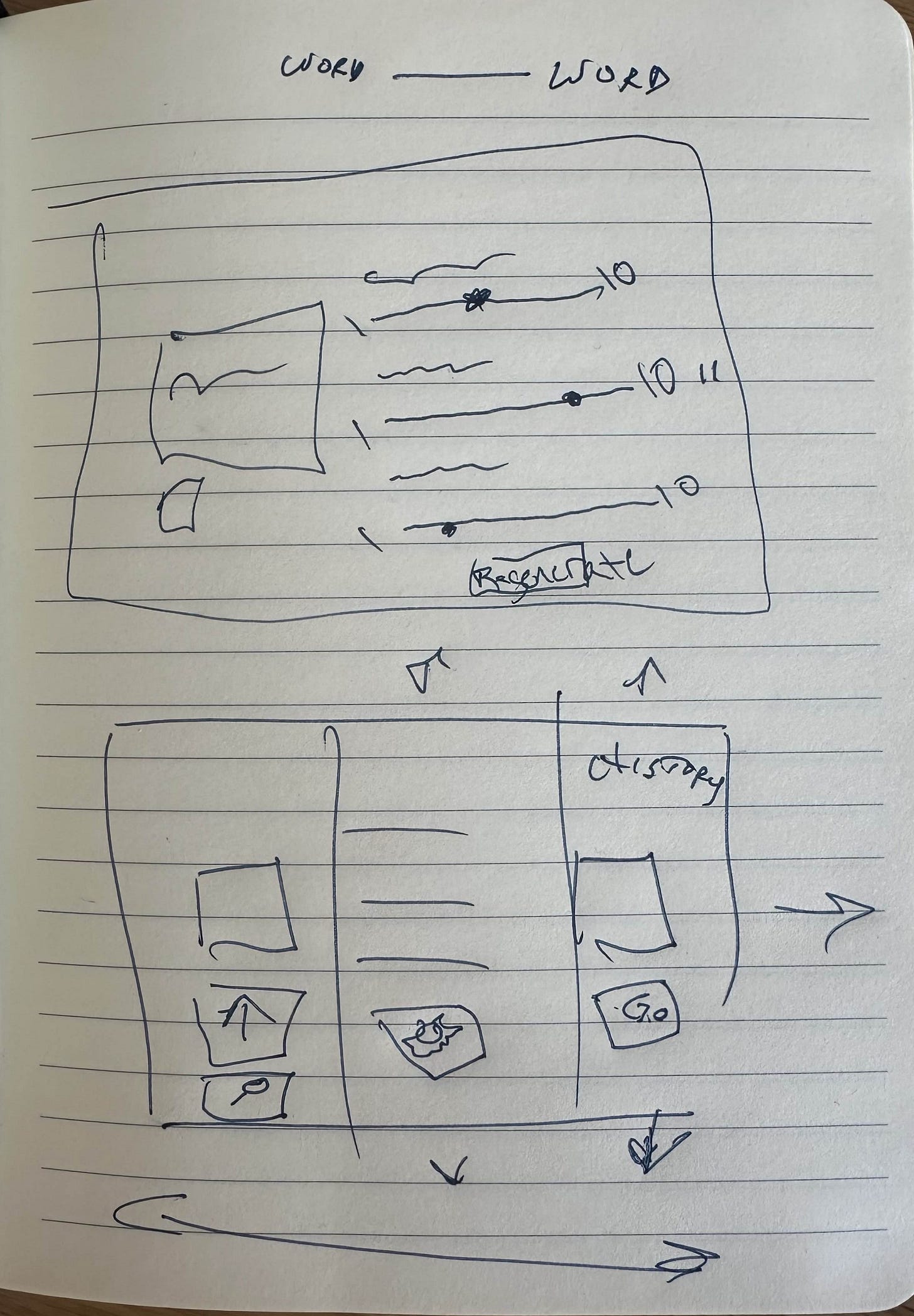

We spent maybe 95% of our time sketching ideas in a notepad, arguing about whether there should be 3 or 5 attributes, and describing to Gemini CLI what we wanted to build.

The AI agent wrote the code.

All of it.

We’d say things like “okay so when the user adjusts a slider, we need to calculate the diff from the original values, then turn those diffs into natural language describing the change, then include the original image and send it all to Gemini.”

And then there would be code. Sometimes it worked. Sometimes we’d test it and say “no, that’s not quite right, here’s the page from the documentation that the Google team shared with us about the API, do it this way.” And it would fix it.

We were kind of pair programming, but the “typing code” half of the pair was a machine.

I know no one likes the 2am debugging session. The desperate Stack Overflow searches. The moment when your teammate who actually knows React wakes up and saves everyone. The caffeine-fueled sprint to get something working before the deadline.

We had coffee. But we didn’t really debug. We didn’t frantically Google. We mostly just… talked. About what we wanted. About whether the interface made sense. About what should happen when you branch off from a previous image. Should it remember its history or start fresh?
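To make the “remember its history” option concrete, here is one way it could be modeled: every generated image keeps a pointer to the image it came from, so branches share their ancestry. This is a hypothetical Python sketch, not how Photoslider actually stores things; `HistoryNode`, `branch`, `lineage`, and the image ids are invented names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)
class HistoryNode:
    """One image in the history tree, with the slider scores it was made from."""
    image_id: str
    scores: dict[str, int]
    parent: Optional["HistoryNode"] = field(default=None, repr=False)
    children: list["HistoryNode"] = field(default_factory=list, repr=False)

    def branch(self, image_id: str, scores: dict[str, int]) -> "HistoryNode":
        """Create a new image derived from this one and link the two."""
        child = HistoryNode(image_id, scores, parent=self)
        self.children.append(child)
        return child

    def lineage(self) -> list[str]:
        """Return image ids from the original upload down to this node."""
        path = []
        node: Optional[HistoryNode] = self
        while node is not None:
            path.append(node.image_id)
            node = node.parent
        return list(reversed(path))


upload = HistoryNode("img-0", {"warmth": 8, "drama": 4})
stormy = upload.branch("img-1", {"warmth": 4, "drama": 9})
stormier = stormy.branch("img-2", {"warmth": 3, "drama": 10})
calm = upload.branch("img-3", {"warmth": 9, "drama": 2})

print(stormier.lineage())  # ['img-0', 'img-1', 'img-2']
print(calm.lineage())      # ['img-0', 'img-3']
```

The “start fresh” option would simply drop the `parent` link and treat each branch point as a new upload.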

Even though I’ve been neck deep in agentic coding for months now, the ratio felt wrong. Too much output for the input. Too much progress for the effort expended.

I kept waiting for the other shoe to drop. For the part where we’d have to actually roll up our sleeves and fix something the AI couldn’t figure out.

It never really came.

The Implication

This whole experience has me thinking about what hackathons even are anymore.

Hackathons have always been about speed and delivery under time pressure. That part doesn’t change. The clock is still ticking. The deadline is still real. You still have to ship something that works.

What changes is what breaks when you move fast.

See, when you’re typing code yourself, moving fast can mean bugs. Typos. Off-by-one errors. Forgetting to handle edge cases. The things that break are small and fixable if you’re good.

But when an AI agent is writing your code? Moving fast can mean spaghetti.

The agent will do exactly what you tell it to do. At a high level of skill. Right now. Without arguing.

And if you haven’t thought about architecture? If you haven’t set up the bones of the thing correctly? If you’re just saying “add this feature” and “now add these other features” without thinking about how they fit together?

You end up with a mess. Code that works until it doesn’t. Features that sort of integrate but not really. A codebase that the agent itself starts getting confused by.

It’s a different kind of pressure. Not “debug before time runs out” but “stay coherent while building at 10x normal velocity.”

I keep coming back to cooking shows, but for different reasons now.

Iron Chef. Chopped. The Great British Baking Show.

They’re not testing whether you can cook. Everyone can cook. They’re testing whether you can:

Make architectural decisions under pressure (do you have time for a three-layer cake or should you pivot to cupcakes?)

Adapt when a twist comes (the ingredient you planned around is suddenly off-limits)

Maintain coherence while moving fast (does your dish still make sense as a whole?)

Work within the affordances of your tools (some techniques work in 90 minutes, some don’t)

Now imagine that, but updated for building with AI agents:

Round 1 (3 hours): Build a Twitter clone. Or a daily deals site like Woot. Or a Reddit-style forum. You know, something real. Something with users and posts and comments and voting. Something people might actually use. Your AI agent can write all the code.

The Twist (announced at 3 hours): Your Twitter clone must now support real-time collaborative editing on posts. Or your daily deals site needs a live auction system. Or your Reddit clone has to handle threaded voice replies.

If your architecture is solid? The agent handles it. Maybe forty-five minutes: refactor a bit, add some new models, wire it up, done.

If you’ve been cowboy coding at agent speed? You might spend the next three hours in hell.

The skill is control at velocity. Knowing what to specify and what to leave flexible. Building with agents in a way that doesn’t paint you into corners.

It’s still a hackathon. Still fast. Still pressure. Still about what you can ship before time runs out.

Just… different things break now.

I’m Not Sure About Any Of This

Maybe I’m wrong. Maybe this was just one weird hackathon where the stars aligned. Maybe next time the AI agent will spend 6 hours stuck on some dependency issue and we’ll be back to traditional debugging.

Or maybe this is the new normal and I just haven’t processed it yet.

I keep thinking about our experience playing with the sliders. We were testing Photoslider, and I uploaded a photo of a sunset. The AI came back with “warmth: 8/10, tranquility: 6/10, drama: 4/10.”

And I thought: that’s not wrong.

It’s not right in any objective sense, though. How do you measure the tranquility of a photo of a sunset? But it’s not wrong either. It gave me levers to explore. Handles on something ineffable.

I turned warmth down to 4 and drama up to 9. The new image came back darker, stormier, with more contrast. Less “peaceful sunset,” more “the world is ending beautifully.”

Was that what “drama: 9/10” means? I have no idea. But it gave me something I liked.

Where This Might Go

Here’s what I’m thinking:

Near future (6 months): Hackathons start splitting into two tracks. Traditional: “you must write the code yourself.” Experimental: “use whatever tools you want, we’re judging the result.”

Medium future (2 years): The experimental track doesn’t really even need to ban manual code-writing. It does require a wild twist though, forcing you to prove your architecture can flex. The thing that wins isn’t always the prettiest demo. Sometimes it’s the one that handled “now add real-time collaborative editing” halfway through without collapsing into spaghetti.

Far future (5 years): We’ll have established patterns for “agent-proof architecture.” Like how we have design patterns now, but for working with AI that codes. The hackathons test whether you know them. Whether you can apply them under pressure. Whether you can feel when the agent is about to paint you into a corner and course-correct before it happens.

Or maybe none of that happens and this was just a weird Thursday at Google.

The Pitch

But let’s see. If we decide to run this, would you be interested?

I’m calling it The Iron Vibecoder (working title, names are hard).

Here’s the shape:

Small scale first. Maybe 8 teams. Maybe just people who read this and think “that sounds delightfully unhinged.”

6 hours total. Project revealed at start (build a Twitter clone, or a deals site, or a forum, you know, something real). Twist announced at 3 hours.

AI agents expected. If you’re typing all the code yourself, you’re doing it wrong.

Judging borrowed from cooking shows. Does it work? Did you handle the twist? Does it cohere? What’s delightful about it?

A host who’s way too excited about the whole thing. (Probably me. I’m sorry in advance.)

The point is to test a different skill: Can you maintain architectural sanity while building at 10x speed?

When the twist comes (“now add real-time features” or “now support collaborative editing” or “now it needs a marketplace”) we’ll see who architected well and who just yolo’d their way through the first three hours.

Original video on Sora: https://sora.chatgpt.com/p/s_68e13b74cbc881918e7b11057aea50c8

Would it work? No idea. Would it be fun? I think so. Would we learn something about where hackathons are going? Yeah, probably.

If this sounds interesting, let me know. Reply. DM me. Whatever.

I’m looking for:

Participants who want to test their agentic coding skills under weird constraints

Judges with opinions about what makes software feel coherent vs. spaghettified

Twist ideas (what’s a good mid-hackathon pivot that tests architecture without being impossible?)

Better names than “Iron Vibecoder” (please)

Sponsors who think this weird experiment is worth supporting

This might be a disaster. The twist might be too hard. The judging might be too subjective. Someone might spend the whole time arguing with their AI about whether to use WebSockets or Server-Sent Events.

But that’s what experiments are for.

We built Photoslider by describing it into existence. We explored interfaces through sliders measuring abstract concepts. We did it at a hackathon with a browser that has AI built in.

The tools changed. The skills that matter changed. Maybe the format should change too.

Let’s see what happens when we optimize for architectural coherence under agent velocity instead of raw coding speed.

The clock’s still ticking. The pressure’s still real. But what breaks is different now.
