The Cause of, and the Solution to, All Your Team's Problems

So there's this developer. Let's call them Pat. Pat discovered AI coding assistants last month. And Pat thought, "Finally! I can fix all the tech debt that has been getting deprioritized since 2019!"

Pat's productivity, working with their AI coding agents, went through the roof.

Everything Goes Wrong

Pat started generating code like a caffeinated octopus with eight keyboards. Pull requests started flowing. Rivers of code. Tsunamis of perfectly formatted, well-commented, syntactically correct implementations.

The team's Slack channel, which had been a peaceful meadow of occasional stand-up notes and lunch polls, became... something else.

"Pat, we need to talk about the seventeen PRs you opened before standup."

"Pat, did you really refactor the ENTIRE authentication system? It... actually looks better but I need a few days to be sure."

"Pat, why does this PR have 3,000 lines of code and a commit message that just says 'idk Claude wanted this'?"

The Elephant in the Code Review

You see, Pat's teammates were drowning. Most of the code was actually pretty good, some of it was brilliant, and yeah, occasionally there was something that would have deleted production. But how could you tell which was which when it was coming at you like a firehose of syntax?

Sarah would spend three hours reviewing a PR that Pat generated in twelve minutes, and the worst part? The code was usually fine. Better than fine. But Sarah couldn't just skim it because what if THIS was the one that wasn't?

Tom started having dreams where curly braces chased him through an infinite diff view, whispering "I might have a subtle race condition."

Lisa... Lisa just started approving everything with the comment "LGTM" because the first ten PRs she carefully reviewed were all genuinely good and she had actual work to do too.

Everyone was mad at Pat. But Pat wasn't the problem. Pat had discovered something important, something that reminds me of an old piece of programming folklore:

"XML is like violence: if it doesn't solve your problems, you didn't use enough of it."

Except now it's AI. And Pat was using just enough AI to create problems, but not enough AI to solve them.

The Part Where We Learn Something (Maybe)

Sarah was still reviewing code like it was 2015, reading every line, mentally executing each function, treating code review like she was defusing a bomb with her teeth.

But what if...

What if Sarah had her own AI assistant? Not just for writing code, but to help her read Pat's code?

Tom could have an AI that transforms those 3,000-line diffs into something digestible. "Pat basically replaced our entire database layer with something that's 30% faster and uses half the memory. The tests pass. There's one weird bit around connection pooling that might be genius or might leak memory under load. Investigating."

Lisa could stop LGTM-ing everything and start asking her AI, "What's the riskiest part of this PR?" and actually get an answer that lets her focus her limited attention where it matters.

The Recursive Solution

I’ve been accused recently of being a tech optimist, and maybe there's some truth to it, because I look at problems as just opportunities for more solutions. And you know what's beautiful about AI-generated problems? They're opportunities for AI-generated solutions!

It's like that thing with the turtles, except instead of turtles, it's AI agents reviewing code written by AI agents being tested by AI agents being deployed by AI agents being monitored by AI agents.

Pat floods the team with PRs? Give everyone AI reviewers.

AI reviewers miss subtle bugs? Add an AI meta-reviewer.

AI meta-reviewer gets confused? Build an AI to explain what the AI meta-reviewer meant.

Someone complains this is getting out of hand? Generate an AI therapist to help them process their feelings about the new development workflow.

A Story About A Fox

There's this fox who lives near my office. Every morning, the fox tries to catch mice. Old school. Patience, stealth, pouncing. Classic fox stuff.

Then one day, the fox discovers it can team up with the local hawk. The hawk spots mice from above, the fox chases them out of hiding. Suddenly, there are too many mice. The fox can't eat them all.

Does the fox complain about the hawk? No. The fox invites more foxes. Now there are too many foxes and not enough coordination. So they develop a complex social structure with designated hunters, scouts, and even fox managers (middle management exists everywhere, apparently).

The point is...

The Octopus in the Room

Actually, wait. Before we talk any more about foxes, we need to talk about octopuses.

A few weeks ago I wrote this thing about being an octopus developer (eight arms, eight parallel projects, each arm with its own semi-autonomous brain cluster). Then Justin Searls read it and pointed out an important part I had left out.

Justin did the math. Eight humans on a team means 28 1:1 relationships to manage. Normal. Fine. We've been dealing with this since Fred Brooks wrote about it.

But eight humans each wielding eight AI sub-agents? That's 64 entities, which means... 2,016 relationships. Not only that, but 1,764 of those connections are unidirectional. The agents can receive information but they can't retain it, can't pipe up in meetings, can't say "hey wait, Pat's PR conflicts with what I'm building."
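If you want to check that arithmetic yourself, here's a minimal sketch. It assumes every entity can, in principle, pair up with every other one, and it takes the 64-entity count above as given. The function name `pairwise_links` is made up for illustration. It also doesn't try to reproduce the 1,764 one-way figure, since that depends on how you classify each link.

```python
from math import comb


def pairwise_links(n: int) -> int:
    """Count the distinct pairs among n entities, i.e. n * (n - 1) / 2."""
    return comb(n, 2)


# Eight humans on a team.
print(pairwise_links(8))   # 28

# The 64 entities from the paragraph above.
print(pairwise_links(64))  # 2016
```

The count grows roughly with the square of the headcount, which is why going from 8 to 64 entities multiplies the relationships by 72 rather than by 8.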

Justin put it perfectly: "a manager has no observable signal that their team's composition has changed so radically—they'll walk into the room and see the same eight nerds staring at their computers as ever before."

The octopuses can't coordinate. They're all in the same reef, ink everywhere, no one knows whose arm is whose anymore. And Pat (sweet, productive Pat) is out here with eight arms generating code while Sarah's still got her two human hands trying to review it all.

I was also recently on Justin’s podcast, Breaking Change, talking about how most of the best practices that helped us over the last 20 or so years become irrelevant when you add AI agents to the mix. Check it out: V42.0.1 Ignore All Previous Instructions

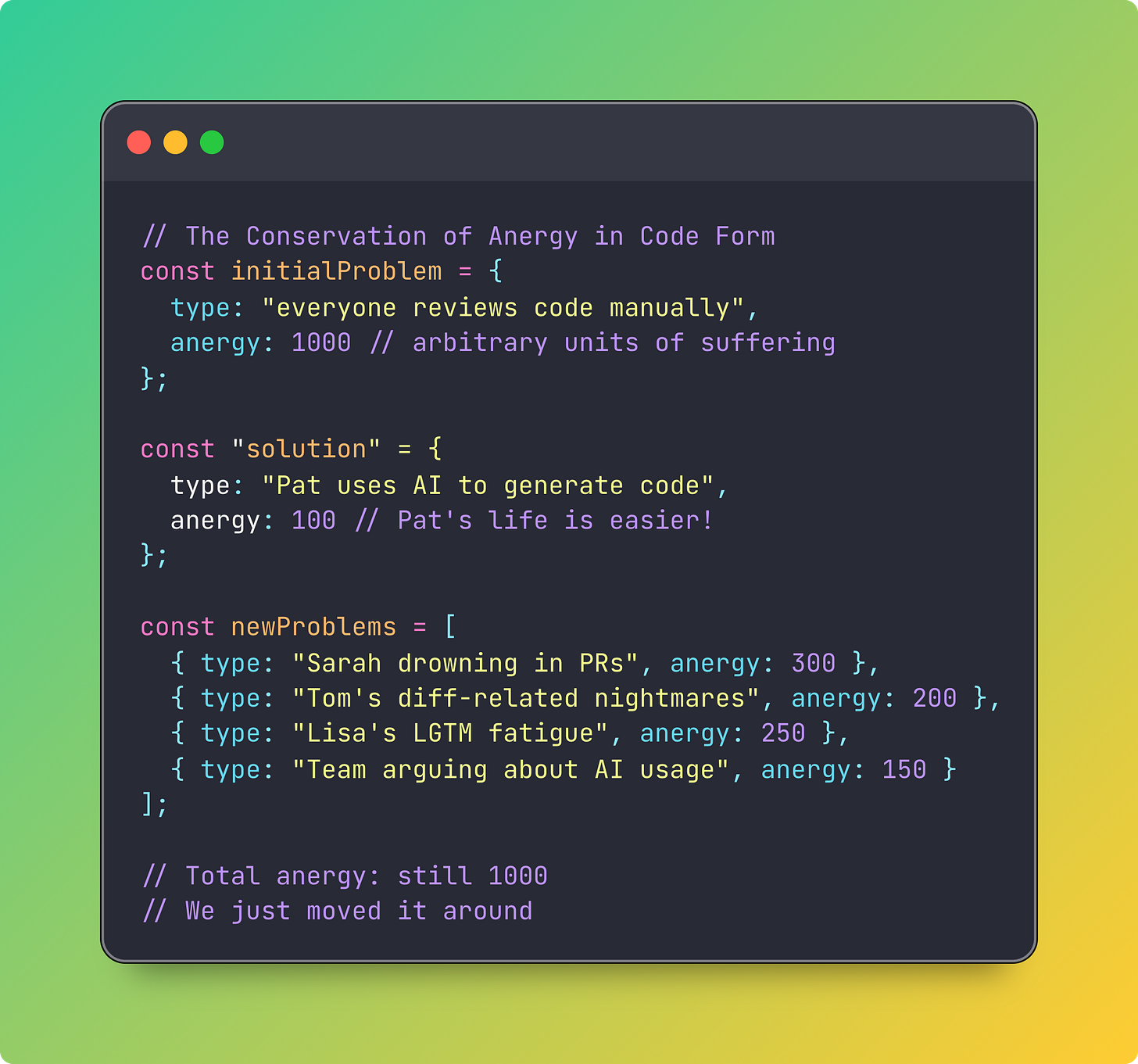

A Brief Detour Into the Conservation of Misery

So back to the foxes…

There's this book called Systemantics (it now goes by The Systems Bible if you want to search for it) by John Gall, and he talks about this thing called the Law of Conservation of Anergy. Anergy (not a typo, anergy) is the amount of effort required to drag reality into alignment with what humans actually want.

The law states that the total amount of anergy in the universe is constant. You can't destroy it. You can only move it around, like trying to smooth out a bubble under wallpaper. Push it down here, it pops up there.

Gall has this example about garbage collection that's... conveniently similar to our fox situation. See, people used to just take their own garbage to the dump. Simple. Direct. One person, one garbage, one trip.

Then someone said, "Hey, what if we all chip in and pay someone to collect everyone's garbage?"

Brilliant! Less work for everyone!

Except now you need to organize who pays what. So you need a treasurer. And rules about what counts as garbage. And someone to enforce the rules. And suddenly you have a town council, and elections, and that one neighbor who insists pizza boxes are recyclable (they're not, SETH), and three-hour meetings about whether the garbage trucks should turn left or right at Oak Street.

The anergy didn't disappear. It just transformed from "carrying garbage" into "arguing with Seth about pizza boxes."

So when Pat floods the team with AI-generated PRs, we haven't reduced the total amount of work. We've just converted "writing code slowly" into "reviewing code frantically." And when we give everyone AI reviewers, we're not eliminating the anergy, we're transforming it into "debugging why the AI approved the code that deletes the production database."

The fox consortium doesn't have fewer problems than the solo fox. They have different problems. More complex problems. Problems that require meetings and organizational charts and probably a Fox Resources department.

Why People Are Uncomfortable

Here's what I think really bothers people: Pat is thinking differently about code. Pat treats code like it's disposable, experimental, abundant. Because for Pat, it is.

Meanwhile, Sarah treats code like it's precious, careful, crafted. Because for Sarah, it always has been.

But what if they're both right? What if the future is Pat generating seventeen wild experiments before breakfast, and Sarah using AI to quickly identify the two that are actually brilliant and the one that might have a subtle bug?

What if Tom uses AI to refactor Pat's working-but-chaotic code into something maintainable?

What if Lisa uses AI to predict which of Pat's PRs will cause production incidents (rare, but it happens) and which ones will accidentally solve problems we didn't even know we had (surprisingly common)?

The Part Where We Conclude

So yeah. Just like XML, if AI is causing you problems, you're not using enough of it.

Your teammate generating too much code? Don't ask them to slow down. Speed up your reviews with AI.

Your AI reviews missing edge cases? Don't go back to manual reviews. Add AI edge case detection.

Your AI edge case detection hallucinating edge cases that don't exist? Add AI hallucination detection.

Your AI hallucination detection having an existential crisis? I mean... at that point maybe take a coffee break. But after coffee? More AI.

The secret isn't balance. It's not careful integration. It's not thoughtful consideration of appropriate use cases.

The secret is embracing the chaos. Let Pat flood the zone with code. Let Sarah's AI drink from the firehose. Let the whole team level up together, or let the whole thing collapse into a beautiful disaster that teaches us something new about how humans and machines can work together.

Because like we talked about in the past: Nobody Knows How To Build With AI Yet. We're all just Pat, discovering AI, trying to figure out what happens when you give a developer unlimited code generation power.

And the answer, apparently, is that you give everyone else unlimited code review power.

And then unlimited code review review power.

And then...

Well, I haven't figured out what comes next. But I bet if I ask an AI, it'll have some ideas.

Code reviews create too much friction in today's world, and I believe removing 90% of them and making quality assurance the developer's responsibility, supported by their set of agents, is the right way to go.

AI code reviews have always made me nervous in codebases that are part of large systems. I just don't believe AI can have enough context to understand how a change can cause ripple effects across a system, especially considering how decoupled these systems can be.